An exploration of voice visualization to improve Google Nest Hub Max's user experience.

PROJECT BRIEF

Google Nest Hub Max is a screen-based smart device that helps you stay connected and manage your life. You can use it as a digital photo frame, as a control center for the household's compatible devices, and to make video and audio calls.

For this project, I worked together with Ioana Oprescu, and the design challenge was to explore the visual representation of verbal communication. When talking on the phone, what can the voice look like?

USER PERSONA

To solve the challenge, we first needed to ask: who are we designing for? What is their relationship with this product and service? What interface problems can we solve for them?

Thus, we created a detailed user persona who had very specific needs.

THIS IS EMMA SÁNCHEZ

She is a young millennial woman of Venezuelan descent. As a first-generation American born in the USA, she grew up bilingual.

She is 27 years old and lives in Brooklyn, New York.

She wants to get closer to her grandmother, who lives in Florida. They share a love of food. However, Emma sometimes has a hard time understanding her grandmother's Spanish. Google Nest's Voice Visualization technology helps her overcome that language barrier.

THE PROBLEM

Like many other users around the world, Emma has to communicate with people who don't speak the same language at the same level of fluency. This can lead to many misunderstandings.

THE SOLUTION: VOICE TRANSLATOR

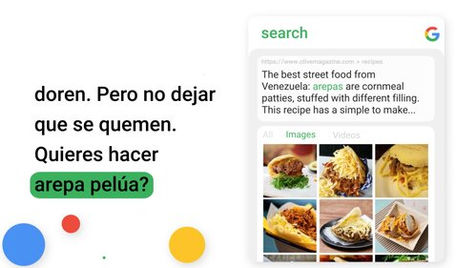

As a solution, we conceived an AI service that transcribes your conversation live and picks up on the keywords each person says. It then highlights those words and links them to other Google services such as Google Translate, Google Conversion, and Google Search.

Google's Voice Translator makes everyday conversations easier and less prone to misunderstanding.

INITIAL EXPLORATION

When Ioana and I first started working on this project, we focused on aesthetics and on sound-reactive design and animation. We set aside both Google's branding and the UI/UX considerations of the project, in the naive and hopeful belief that we would come up with a unique and brilliant representation of sound.

Even though that initial process was insightful, we failed to identify the real design problem we were meant to solve. Only after we spoke with Sharon Harris, an RCAD Motion Design graduate who works at Google, did we realize that we needed to frame our design process around improving the user experience. That framework changed our design direction going forward, and that is when we created Emma, basing her struggles on our own experiences as bilingual students.

FURTHER EXPLORATION

Once we had created our user persona, Emma, we explored variations of simple designs that reflected Google's geometric, minimalist, yet playful brand.

We also explored ways to make the voice visualization experience feel less "robotic" and "cold". We did this by adding visual cues, such as photos, related to the user's personal life and to their relationship with the person on the other end of the call.

FINAL STAGES OF DESIGN

After a lot of visual exploration of what our voice translator would look like, Ioana and I focused on improving readability in the context of Google Nest Hub Max. Because this device usually stands in shared spaces of the house and is always plugged into a power outlet, most users interact with it from a distance, unlike a smartphone or a regular tablet.

That distance affects how big the type has to be and how much information one can show on the screen simultaneously.

CREDITS

Creative Direction:

Beatriz Correia Lima

Ioana Oprescu

Sound Design:

Kelly Warner

Universal Production Music

Voices:

Beatriz Correia Lima

Priscilla Diaz

Image Sources:

Daniel Xavier at pexels.com

SOFTWARE USED

Sketch

Adobe After Effects

Adobe Illustrator

Cinema 4D

Octane

Adobe Audition